2019

How to read the neural code. — What principles underlie the computations performed by neuronal networks? Answering this question is central to neuroscience, and it underpins both the characterization of mental disorders and the development of devices that let humans and machines interact through neuronal signals. Work in the Computational Systems Neuroscience Group investigated how the responses of single neurons, and the joint responses of pairs of neurons, provide insight into this fundamental question.

Representational untangling in the visual cortex. — The visual cortex contributes to the interpretation of the stimuli detected by our retinae. To interpret the environment, subsequent stages of processing need a code that is easy to read. Compare the readable message we happily read on our phone with the gibberish sequence of zeros and ones that the phone exchanges with the cell tower. The magic that converts one of these codes into the other is a nonlinear transformation. Such nonlinear transformations certainly take place in the brain, but what they are and how they contribute to an easily readable code is a subject of debate.

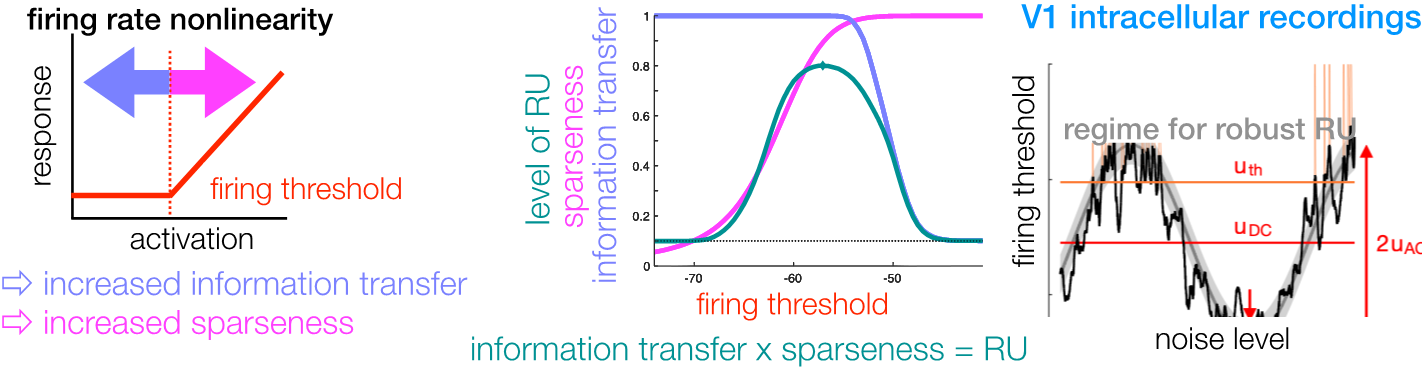

In a collaboration with Peyman Golshani (UCLA), Máté Lengyel (University of Cambridge), and Pierre-Olivier Polack (Rutgers University), we investigated the role of the most basic and most widespread form of nonlinearity, the so-called firing rate nonlinearity, which determines how the membrane potential of a neuron is transformed into the intensity of action potential generation. We showed that a key characteristic of this nonlinearity, the threshold above which neurons start to generate action potentials, controls the readability of the neural code (Figure 1). The threshold controls two opposing effects. A lower threshold increases information transfer, while a higher threshold increases the sparseness of the code: responses in a population become more selective for specific stimuli, which yields an easy-to-read dictionary. Because the two effects pull in opposite directions, the most readable code lies at a sweet spot where both can exert their benefit. This is where representational untangling, the magic of nonlinear computation, appears. Using recordings from neurons in mice, we demonstrated that the properties of the recorded neurons are optimized for representational untangling, that is, for an easy-to-read code.
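A toy simulation can make the threshold trade-off concrete. The sketch below is not the model from the study; it assumes a threshold-linear (rectified) firing rate nonlinearity, standard-normal membrane-potential responses to a set of stimuli, the fraction of stimuli that evoke any spiking as a crude stand-in for information transfer (responses that stay below threshold all map to zero and become indistinguishable), and one minus the Treves-Rolls activity ratio as the sparseness measure. All function names are hypothetical.

```python
import random


def threshold_linear(v, theta, gain=1.0):
    """Assumed rectified-linear nonlinearity: zero below threshold theta,
    linear in the suprathreshold membrane potential above it."""
    return gain * max(0.0, v - theta)


def treves_rolls_sparseness(rates):
    """One minus the Treves-Rolls activity ratio a = <r>^2 / <r^2>.
    0 means dense (all responses equal); values near 1 mean sparse."""
    n = len(rates)
    mean_r = sum(rates) / n
    mean_r2 = sum(r * r for r in rates) / n
    if mean_r2 == 0.0:
        return 1.0  # silent population: treated as maximally sparse (a convention)
    return 1.0 - (mean_r ** 2) / mean_r2


def fraction_active(rates):
    """Crude proxy for information transfer (an assumption of this sketch):
    stimuli whose responses stay below threshold all map to zero rate."""
    return sum(1 for r in rates if r > 0.0) / len(rates)


def sweep_threshold(thresholds, n_stimuli=5000, seed=0):
    """Apply each threshold to the same hypothetical membrane potentials and
    return (theta, fraction_active, sparseness) tuples."""
    rng = random.Random(seed)
    potentials = [rng.gauss(0.0, 1.0) for _ in range(n_stimuli)]
    results = []
    for theta in thresholds:
        rates = [threshold_linear(v, theta) for v in potentials]
        results.append((theta, fraction_active(rates), treves_rolls_sparseness(rates)))
    return results


if __name__ == "__main__":
    for theta, active, sparse in sweep_threshold([-1.0, 0.0, 1.0, 2.0]):
        print(f"theta={theta:+.1f}  active={active:.2f}  sparseness={sparse:.2f}")
```

Raising `theta` lowers the fraction of responsive stimuli and raises sparseness, which qualitatively mirrors the two opposing trends in the middle panel of Figure 1. Locating the sweet spot for representational untangling would additionally require a readout-based measure of how easily the code can be decoded, which this sketch does not attempt.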

Generative computations in the visual cortex. — Machine vision, and in particular so-called deep discriminative models, which are optimized to perform image categorization, have proven effective in describing how neurons respond to complex stimuli. Theoretical considerations, however, indicate that computations optimized to perform a single task do not reflect the flexibility of the nervous system, that is, its capability to build up representations serving for multiple tasks. Inspired by this insight, we designed an experiment that can demonstrate the presence of stimulus-dependent correlations, a hallmark of another class of machine vision models, generative models. As opposed to deep discriminative

Figure 1. Left: Mapping of membrane potential activation to the intensity of action potential generation. Arrows denote potential changes in firing threshold. Middle: Changes in the fidelity of information transfer and sparseness as firing threshold is changed. Representational untangling (RU) is a result of the combined effect of information transfer and sparseness. Right: the parameter regime where RU is most effective (gray area) and measured properties of neurons in the visual cortex of mice. Overlap between the two indicates that neurons are optimized to achieve RU.

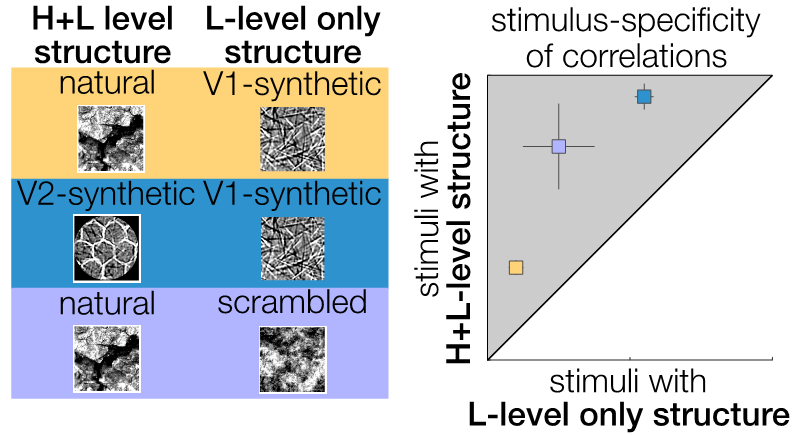

models, deep generative models build up a task-independent representation of the environment. Based on collaborations with Wolf Singer (MPI/ESI Frankfurt) we designed a set of behaving macaque experiments that could identify these hallmarks. Our results are based on the prediction that stimulus statistics affects the level of stimulus specificity of correlated variability between neurons (Figure 2). Critically, these results resolve a long standing puzzle in neuroscience. Traditional models, such as the models underlying deep discriminative models regard correlations as detrimental for efficient encoding of stimulus identity but these correlations are ubiquitous in the nervous system. Our results show that these correlations are a consequence of optimal computations.

Figure 2. Stimulus-specificity of correlations. Deep generative models predict that images featuring high-level structure (left) display a higher level of stimulus-specificity in correlated variability than images characterized only by low-level structure (right).
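Stimulus-specificity of correlated variability can be quantified in several ways. The sketch below assumes one simple choice, not necessarily the one used in the macaque experiments: noise correlations are computed separately for each stimulus from repeated trials, and their standard deviation across stimuli serves as a hypothetical specificity index. The toy data generator `simulate` is likewise hypothetical. It injects a shared fluctuation whose coupling to both neurons is chosen per stimulus, so it only demonstrates the measurement, not the generative-model prediction itself.

```python
import math
import random
import statistics


def pearson(x, y):
    """Pearson correlation of two equal-length sequences."""
    mx, my = statistics.fmean(x), statistics.fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def noise_correlations(trials):
    """trials[s] holds (r1, r2) response pairs from repeated presentations of
    stimulus s. Correlating within a stimulus removes the stimulus-evoked mean,
    leaving only trial-to-trial (noise) covariation."""
    result = []
    for pairs in trials:
        r1 = [p[0] for p in pairs]
        r2 = [p[1] for p in pairs]
        result.append(pearson(r1, r2))
    return result


def stimulus_specificity(trials):
    """Hypothetical index: spread (population std) of noise correlations across stimuli."""
    return statistics.pstdev(noise_correlations(trials))


def simulate(couplings, n_trials=400, seed=1):
    """Hypothetical toy data: a shared latent fluctuation z enters both neurons
    with stimulus-dependent coupling c; private noise has unit variance, so the
    expected noise correlation is c**2 / (c**2 + 1)."""
    rng = random.Random(seed)
    trials = []
    for s, c in enumerate(couplings):
        mean1, mean2 = 5.0 + s, 3.0 - 0.5 * s  # arbitrary stimulus-evoked means
        pairs = []
        for _ in range(n_trials):
            z = rng.gauss(0.0, 1.0)
            pairs.append((mean1 + c * z + rng.gauss(0.0, 1.0),
                          mean2 + c * z + rng.gauss(0.0, 1.0)))
        trials.append(pairs)
    return trials


if __name__ == "__main__":
    varying = simulate([0.2, 0.6, 1.0, 1.5])
    constant = simulate([0.8, 0.8, 0.8, 0.8])
    print("varying coupling: ", round(stimulus_specificity(varying), 3))
    print("constant coupling:", round(stimulus_specificity(constant), 3))
```

Because correlations are computed within each stimulus, differences in mean firing between stimuli do not inflate the index; only changes in how the two neurons co-fluctuate across stimuli do.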

External links:

[1] https://doi.org/10.1016/j.conb.2019.09.002

[2] https://doi.org/10.7554/eLife.43625

[3] https://doi.org/10.1073/pnas.1816766116